We've all been there: ordering a shirt online only to find it doesn’t fit or look the way we imagined. That frustration is exactly why AR try-on apps are reshaping the way people shop.

Instead of guessing, shoppers can use a virtual fitting room to see how clothes look in real time without going into a store.

A recent report shows that virtual try-on technology can improve conversion rates by up to 30% while reducing returns. That’s a win-win for both customers and businesses.

That’s why it's worth building an augmented reality shopping app that lets customers try on clothes online.

In this blog, we’ll show you how to build your own AR try-on shopping app in Python, with step-by-step code.

What Is an AR Try-On Shopping App? (And Why Is It Important?)

An AR try-on shopping app lets customers try clothes, shoes, or accessories virtually using their camera.

Think of it as a virtual fitting room, where digital clothing is overlaid on a live video feed in real time.

Major brands are already leading the way:

- Amazon offers AR try-before-you-buy experiences.

- Nike has launched virtual sneaker try-on features.

- Gucci lets customers preview luxury items through augmented reality in its shopping app.

Businesses that offer virtual try-on technology build trust, reduce returns, and increase sales. Developers who understand how to build these apps are in high demand.

Why Build It with Python? (The Developer’s Advantage)

If you’re a developer, Python is a great choice for AR projects. Why?

Because it’s simple, flexible, and comes with a large ecosystem of libraries for computer vision and AR development.

- OpenCV helps with face and body tracking.

- Mediapipe makes real-time pose detection smooth.

- Open3D allows 3D object handling.

- With third-party wrappers for ARKit/ARCore, you can bridge into mobile AR.

Combine it with AI/ML models, and you can create realistic AR clothing try-on apps that adapt to body shape and movement.

What Tech Stack Will You Need?

Here’s the stack you’ll need to build a Python virtual try-on app:

- Python 3.13: the foundation of the project (if Mediapipe doesn't yet publish wheels for your Python version, use an earlier 3.x release).

- OpenCV: face and body tracking.

- Mediapipe: pose and landmark detection.

- TensorFlow / PyTorch (optional): deep learning models for clothing fit.

- Streamlit / Flask: web integration to make the app interactive.

- GitHub: version control and repo sharing (you’ll get access to our GitHub repo for the Python AR try-on app).

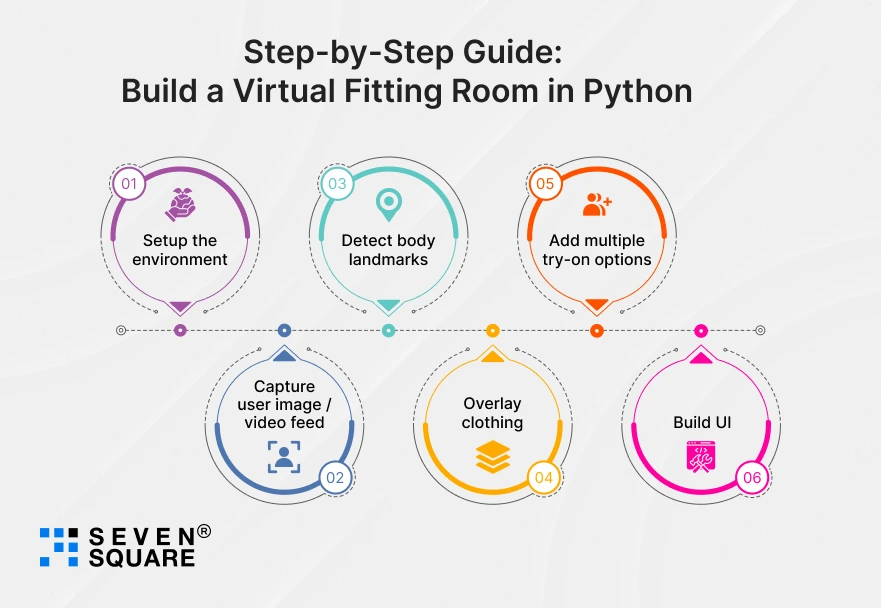

Step-by-Step Guide: Build a Virtual Fitting Room in Python

Here are all the steps to build a virtual fitting room app in Python.

Step 1: Set up the environment

Open a terminal and run:

```bash
# create a virtual environment (optional)
python -m venv venv

# on Windows:
venv\Scripts\activate

# on macOS / Linux:
source venv/bin/activate

# install required libraries
pip install opencv-python mediapipe numpy pillow streamlit
```

These packages give you the core stack for this Python virtual try-on tutorial: OpenCV for video manipulation, Mediapipe for pose detection, NumPy for array math, Pillow for PNG alpha handling, and Streamlit for a quick UI.
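Before running the scripts, it can help to confirm that every package imports under the name the code uses (opencv-python is imported as cv2 and pillow as PIL). The snippet below is a minimal sketch using only the standard library; the helper name `missing_modules` is our own, not part of any package.

```python
import importlib.util

# Import names used by the scripts in this tutorial
# (pip name -> import name: opencv-python -> cv2, pillow -> PIL)
REQUIRED = ["cv2", "mediapipe", "numpy", "PIL", "streamlit"]


def missing_modules(names):
    """Return the top-level import names that can't be found in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing:", ", ".join(missing), "- rerun the pip install command")
    else:
        print("All required packages are installed.")
```

Run it inside the activated virtual environment; an empty result means the imports used below should resolve.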

Step 2: Capture user image / video feed (OpenCV)

Below is a complete, runnable script that opens your webcam, detects body landmarks, and overlays a transparent clothing PNG onto the torso.

Save it as ar_tryon_live.py.

```python
# ar_tryon_live.py
import cv2
import mediapipe as mp
import numpy as np
from PIL import Image

mp_pose = mp.solutions.pose

# Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter
RESAMPLE = Image.LANCZOS


def overlay_pil_on_cv2(bg_bgr, overlay_pil, x, y):
    """Overlay a PIL RGBA image onto an OpenCV BGR background at (x, y) top-left."""
    bg_pil = Image.fromarray(cv2.cvtColor(bg_bgr, cv2.COLOR_BGR2RGB))
    # passing the RGBA image as the mask makes paste() use its alpha channel
    bg_pil.paste(overlay_pil, (int(x), int(y)), overlay_pil)
    return cv2.cvtColor(np.array(bg_pil), cv2.COLOR_RGB2BGR)


# Clothing images (PNG with transparency); change paths or add more outfits here.
CLOTHES = {
    "red_tshirt": "assets/red_tshirt.png",
    "blue_jacket": "assets/blue_jacket.png",
}

# preload every outfit once so the video loop doesn't read from disk
clothes_pil = {k: Image.open(v).convert("RGBA") for k, v in CLOTHES.items()}


def distance(p1, p2):
    return np.linalg.norm(np.array(p1) - np.array(p2))


def main():
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        raise RuntimeError("Could not open the webcam (device 0)")

    outfit_names = list(CLOTHES.keys())
    idx = 0
    selected_outfit = outfit_names[idx]

    with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
        while True:
            ret, frame = cap.read()
            if not ret:
                break

            frame = cv2.flip(frame, 1)  # mirror view
            h, w, _ = frame.shape

            # Mediapipe works on RGB images
            results = pose.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))

            if results.pose_landmarks:
                lm = results.pose_landmarks.landmark
                # LEFT_SHOULDER = 11, RIGHT_SHOULDER = 12, LEFT_HIP = 23, RIGHT_HIP = 24
                left_sh = (int(lm[11].x * w), int(lm[11].y * h))
                right_sh = (int(lm[12].x * w), int(lm[12].y * h))
                left_hip = (int(lm[23].x * w), int(lm[23].y * h))
                right_hip = (int(lm[24].x * w), int(lm[24].y * h))

                shoulders_center = ((left_sh[0] + right_sh[0]) // 2, (left_sh[1] + right_sh[1]) // 2)
                hips_center = ((left_hip[0] + right_hip[0]) // 2, (left_hip[1] + right_hip[1]) // 2)

                # estimate clothing width & height (tweak scaling factors for look)
                shoulder_width = distance(left_sh, right_sh)
                torso_height = distance(shoulders_center, hips_center)
                w_cloth = int(shoulder_width * 1.4)
                h_cloth = int(torso_height * 1.3)

                # top-left position, slightly above the shoulders center
                x = int(shoulders_center[0] - w_cloth / 2)
                y = int(shoulders_center[1] - 0.2 * h_cloth)

                # resize the selected outfit, keeping its aspect ratio
                pil_cloth = clothes_pil[selected_outfit]
                aspect = pil_cloth.width / pil_cloth.height
                new_w = w_cloth
                new_h = int(new_w / aspect)
                pil_resized = pil_cloth.resize((max(1, new_w), max(1, new_h)), RESAMPLE)

                frame = overlay_pil_on_cv2(frame, pil_resized, x, y)

            # UI instructions
            cv2.putText(frame, "Press [n] to switch outfit, [q] to quit", (10, 30),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2, cv2.LINE_AA)
            cv2.imshow("AR Try-On - Python (Press q to quit)", frame)

            key = cv2.waitKey(1) & 0xFF
            if key == ord("q"):
                break
            elif key == ord("n"):
                idx = (idx + 1) % len(outfit_names)
                selected_outfit = outfit_names[idx]

    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```

Notes:

- Put transparent PNG clothing images in assets/ (e.g., assets/red_tshirt.png, assets/blue_jacket.png). The PNG should have an alpha channel and be roughly full-body or upper-torso sized.

- The overlay mapping in this script uses Mediapipe's shoulder and hip landmarks and scales the clothing based on shoulder width and torso height. It is a simple mapping, but robust enough for Python AR clothing try-on demos.
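If you don't have clothing artwork yet, you can generate simple placeholder PNGs to test the pipeline. This sketch uses Pillow to draw a rough T-shirt silhouette on a fully transparent RGBA canvas; the outline, colors, and the `make_placeholder_shirt` name are illustrative assumptions, not real assets.

```python
from pathlib import Path

from PIL import Image, ImageDraw


def make_placeholder_shirt(path, color, size=(400, 480)):
    """Draw a rough T-shirt silhouette on a transparent canvas and save it as a PNG."""
    w, h = size
    img = Image.new("RGBA", size, (0, 0, 0, 0))  # alpha 0 = fully transparent
    draw = ImageDraw.Draw(img)
    # hypothetical outline as fractions of (width, height): neckline, sleeves, body
    fractions = [
        (0.30, 0.00), (0.70, 0.00), (1.00, 0.15), (0.90, 0.35), (0.80, 0.30),
        (0.80, 1.00), (0.20, 1.00), (0.20, 0.30), (0.10, 0.35), (0.00, 0.15),
    ]
    outline = [(int(fx * (w - 1)), int(fy * (h - 1))) for fx, fy in fractions]
    draw.polygon(outline, fill=tuple(color) + (255,))  # opaque shirt pixels
    Path(path).parent.mkdir(parents=True, exist_ok=True)
    img.save(path)  # PNG keeps the alpha channel
    return img
```

For example, `make_placeholder_shirt("assets/red_tshirt.png", (200, 30, 30))` and `make_placeholder_shirt("assets/blue_jacket.png", (30, 60, 180))` create the two files the live script expects.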

Step 3: Detect body landmarks (Mediapipe)

The script already uses mediapipe.solutions.pose, which returns landmarks such as the shoulders and hips.

These landmarks are the heart of the AR try-on app: we use them to locate and scale the AR clothing.

Key landmarks used:

- LEFT_SHOULDER (index 11)

- RIGHT_SHOULDER (index 12)

- LEFT_HIP (index 23)

- RIGHT_HIP (index 24)

Using these you can:

- Find the torso center

- Compute the shoulder width

- Estimate the torso height

This simple approach works reasonably well when the user faces the camera or stands at a slight angle; it does not model larger body rotations.
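The landmark math can be pulled out into a small, testable function. This sketch takes normalized (x, y) coordinates like the ones Mediapipe returns (values between 0 and 1, relative to frame width and height), converts them to pixels, and computes the torso center, shoulder width, and torso height. The `torso_metrics` name and its dict-based input are our own assumptions.

```python
import math

# Mediapipe Pose landmark indexes used in this tutorial
LEFT_SHOULDER, RIGHT_SHOULDER, LEFT_HIP, RIGHT_HIP = 11, 12, 23, 24


def to_pixels(point, width, height):
    """Convert a normalized (x, y) landmark to integer pixel coordinates."""
    return int(point[0] * width), int(point[1] * height)


def midpoint(a, b):
    return (a[0] + b[0]) // 2, (a[1] + b[1]) // 2


def torso_metrics(landmarks, width, height):
    """Return the torso center, shoulder width and torso height in pixels.

    `landmarks` maps a landmark index to a normalized (x, y) tuple.
    """
    ls, rs, lh, rh = (to_pixels(landmarks[i], width, height)
                      for i in (LEFT_SHOULDER, RIGHT_SHOULDER, LEFT_HIP, RIGHT_HIP))
    shoulders_center = midpoint(ls, rs)
    hips_center = midpoint(lh, rh)
    return {
        "torso_center": midpoint(shoulders_center, hips_center),
        "shoulder_width": math.dist(ls, rs),
        "torso_height": math.dist(shoulders_center, hips_center),
    }
```

With Mediapipe results, the input would be built as `{i: (lm[i].x, lm[i].y) for i in (11, 12, 23, 24)}`. Keeping the geometry free of camera code makes it easy to unit-test with hand-picked coordinates.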

Step 4: Overlay clothing (AR layer)

The overlay function uses Pillow to preserve transparency. Main ideas:

- Load PNG with alpha (RGBA).

- Resize according to shoulder width and torso height.

- Compute top-left (x,y) and paste the PNG on the frame using alpha compositing.

That is shown in the overlay_pil_on_cv2 helper in ar_tryon_live.py.

This method keeps the clothing edges visually clean and is well suited to a tutorial-level virtual try-on demo.
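The Pillow helper converts the whole frame to a PIL image and back on every call. As an alternative (a swapped-in technique, not what ar_tryon_live.py does), the same alpha compositing can be done directly on NumPy arrays with `out = alpha * overlay + (1 - alpha) * background`, applied only to the part of the overlay that falls inside the frame. The name `alpha_blend` is hypothetical, and the overlay's color channels are assumed to already be in the frame's channel order (BGRA for an OpenCV frame).

```python
import numpy as np


def alpha_blend(frame, overlay, x, y):
    """Alpha-composite a 4-channel overlay onto a 3-channel frame at top-left (x, y).

    The overlay's color channels must match the frame's order (BGR + alpha for
    OpenCV frames). Any part of the overlay outside the frame is clipped.
    """
    x, y = int(x), int(y)
    fh, fw = frame.shape[:2]
    oh, ow = overlay.shape[:2]

    # intersection of the overlay rectangle with the frame
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + ow, fw), min(y + oh, fh)
    out = frame.copy()
    if x0 >= x1 or y0 >= y1:
        return out  # overlay is entirely off-screen

    crop = overlay[y0 - y:y1 - y, x0 - x:x1 - x]
    # keep a trailing channel axis so alpha broadcasts over the 3 color channels
    alpha = crop[:, :, 3:4].astype(np.float32) / 255.0
    region = out[y0:y1, x0:x1].astype(np.float32)
    blended = alpha * crop[:, :, :3].astype(np.float32) + (1.0 - alpha) * region
    out[y0:y1, x0:x1] = blended.round().astype(frame.dtype)
    return out
```

To use it with the PIL outfits, convert once after resizing, for example `cv2.cvtColor(np.array(pil_resized), cv2.COLOR_RGBA2BGRA)`, then call `alpha_blend(frame, bgra, x, y)`. The explicit clipping matters because the clothing often extends past the frame edges when the user is close to the camera.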

Step 5: Add multiple try-on options

The live script includes two outfits and uses the [n] key to switch between them. To add more outfits, extend the CLOTHES dictionary:

```python
CLOTHES = {
    "red_tshirt": "assets/red_tshirt.png",
    "blue_jacket": "assets/blue_jacket.png",
    "green_shirt": "assets/green_shirt.png",
    # add more PNGs here
}
```

In a web UI (see the Streamlit demo below), let the user pick from a dropdown or upload their own PNG with a file uploader.

That gives the app a real virtual try-on feel and demonstrates multiple try-on options.
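Rather than editing the dictionary by hand every time you add artwork, you could build it from the files in assets/. This is a sketch assuming each outfit is a PNG whose file stem becomes its name (so assets/green_shirt.png becomes "green_shirt"); `discover_outfits` is a hypothetical helper, not part of the scripts in this post.

```python
from pathlib import Path


def discover_outfits(folder="assets"):
    """Map each PNG's file stem to its path, sorted for a stable switching order."""
    return {p.stem: str(p) for p in sorted(Path(folder).glob("*.png"))}
```

In ar_tryon_live.py you would then write `CLOTHES = discover_outfits()`, and the [n] key cycles through whatever PNGs are present. The same dictionary keys can feed the Streamlit dropdown.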

Step 6: Build UI (Streamlit / Flask)

Here are two straightforward ways to make a simple web demo quickly.

1. Simple Streamlit demo (image snapshot flow)

This uses st.camera_input(), which requires the user to grant camera access and take a snapshot. Save it as streamlit_app.py.

```python
# streamlit_app.py
import io

import cv2
import mediapipe as mp
import numpy as np
import streamlit as st
from PIL import Image

st.set_page_config(page_title="Python AR Try-On Demo")
st.title("Python AR Try-On Demo (Snapshot mode)")
st.write("Take a photo or upload an image. Select an outfit to try on.")

uploaded = st.file_uploader("Upload an image (optional)", type=["jpg", "png", "jpeg"])
cam_image = st.camera_input("Or take a picture")

# pick outfit
outfit = st.selectbox("Choose outfit", ["red_tshirt", "blue_jacket"])

# mapping from outfit name to local path
CLOTHES = {"red_tshirt": "assets/red_tshirt.png", "blue_jacket": "assets/blue_jacket.png"}


def overlay_pil_on_cv2(bg_bgr, overlay_pil, x, y):
    """Overlay a PIL RGBA image onto an OpenCV BGR background at (x, y) top-left."""
    bg_pil = Image.fromarray(cv2.cvtColor(bg_bgr, cv2.COLOR_BGR2RGB))
    bg_pil.paste(overlay_pil, (int(x), int(y)), overlay_pil)
    return cv2.cvtColor(np.array(bg_pil), cv2.COLOR_RGB2BGR)


def process_image(image_bytes, outfit_path):
    # decode the image bytes and convert to an OpenCV BGR frame
    image = Image.open(io.BytesIO(image_bytes)).convert("RGB")
    frame = cv2.cvtColor(np.array(image), cv2.COLOR_RGB2BGR)
    h, w, _ = frame.shape

    mp_pose = mp.solutions.pose
    # static_image_mode=True runs full detection on a single photo
    with mp_pose.Pose(static_image_mode=True, min_detection_confidence=0.5) as pose:
        res = pose.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))

    if not res.pose_landmarks:
        return image  # return the original if no person is detected

    lm = res.pose_landmarks.landmark
    left_sh = (int(lm[11].x * w), int(lm[11].y * h))
    right_sh = (int(lm[12].x * w), int(lm[12].y * h))
    shoulders_center = ((left_sh[0] + right_sh[0]) // 2, (left_sh[1] + right_sh[1]) // 2)

    # clothing width follows shoulder width; height keeps the PNG's aspect ratio
    shoulder_width = np.linalg.norm(np.array(left_sh) - np.array(right_sh))
    w_cloth = int(shoulder_width * 1.4)

    pil_cloth = Image.open(outfit_path).convert("RGBA")
    aspect = pil_cloth.width / pil_cloth.height
    new_w = w_cloth
    new_h = int(new_w / aspect)
    # Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter
    pil_resized = pil_cloth.resize((max(1, new_w), max(1, new_h)), Image.LANCZOS)

    x = int(shoulders_center[0] - new_w / 2)
    y = int(shoulders_center[1] - 0.2 * new_h)
    out = overlay_pil_on_cv2(frame, pil_resized, x, y)
    return Image.fromarray(cv2.cvtColor(out, cv2.COLOR_BGR2RGB))


# decide image source: camera snapshot first, then upload
if cam_image:
    bytes_data = cam_image.getvalue()
elif uploaded:
    bytes_data = uploaded.getvalue()
else:
    st.info("Take a picture or upload an image to try an outfit.")
    st.stop()

result_img = process_image(bytes_data, CLOTHES[outfit])
st.image(result_img, caption="Try-On Result", use_container_width=True)
st.markdown("**Tip:** Upload a front-facing photo for the best fit. For a real-time experience, see the desktop script.")
```

Run:

```bash
streamlit run streamlit_app.py
```

This gives you a quick Python virtual try-on demo running in the browser (snapshot mode).

For a real-time webcam feed on the web, consider the streamlit-webrtc package (more setup) or build a Flask MJPEG endpoint.

2. Notes for Flask + MJPEG real-time web streaming (outline)

- Use Flask to serve an MJPEG stream from OpenCV (similar to the desktop script capture loop).

- Serve an HTML page with an img tag whose src points at the stream endpoint; the browser displays the real-time AR overlay as frames arrive.

- This is recommended for production-like demos, but it’s more complex (CORS, SSL for camera access on mobile, mobile vs desktop differences).
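The core of an MJPEG endpoint is a generator that wraps each JPEG-encoded frame in a multipart boundary. The sketch below shows only that framing, using the standard library; the boundary name `frame` is an arbitrary choice, and the commented Flask wiring (including the `/video_feed` route and the `read_jpeg_frames` helper) is a hypothetical outline to adapt. In a real app, each frame would come from the capture loop after the overlay step, encoded with `cv2.imencode(".jpg", frame)`.

```python
BOUNDARY = b"frame"  # must match the boundary declared in the response mimetype


def mjpeg_stream(jpeg_frames):
    """Wrap each JPEG byte string as one part of a multipart/x-mixed-replace stream."""
    for jpg in jpeg_frames:
        yield (b"--" + BOUNDARY + b"\r\n"
               b"Content-Type: image/jpeg\r\n\r\n" + jpg + b"\r\n")


# Hypothetical Flask wiring (requires `pip install flask`):
# from flask import Flask, Response
# app = Flask(__name__)
#
# @app.route("/video_feed")
# def video_feed():
#     return Response(mjpeg_stream(read_jpeg_frames()),
#                     mimetype="multipart/x-mixed-replace; boundary=frame")
#
# The HTML page then only needs: <img src="/video_feed">
```

Because the browser replaces the image with each new part, no JavaScript is needed on the client; the trade-off is higher bandwidth than a video codec.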

Here’s the Complete Code to Build an AR Try-On Shopping App in Python.

What Are the Real-World Use Cases & Business Benefits?

So, why should businesses care about an AR try-on app? Because the impact is real.

- Fashion brands: Improve sales and reduce returns by offering a virtual fitting room experience.

- Small businesses: Compete with industry giants by integrating augmented reality shopping apps without heavy investment.

- Developers: Monetize by building virtual try-on technology solutions for clients in retail, fashion, and even eyewear.

This matters because shoppers want to see how a product will look on them before they trust it enough to buy.

Why Do Businesses Trust Us for AR Shopping App Solutions?

Whether you’re a fashion brand, a startup, or an online retailer, we’ve mastered how to build a virtual fitting room app in Python that customers love.

- Python Expertise: We use OpenCV, Mediapipe, and AI/ML models to create realistic AR try-on apps that adapt to body movements.

- Virtual Fitting Room Solutions: From simple virtual try-on tutorials with code to advanced custom apps, we deliver AR that fits your business goals.

- Step-by-Step Development: We guide you through every phase, from code to a live augmented reality shopping app with GitHub repo support.

- Business-Focused Approach: We help brands boost sales, reduce returns, and stand out with virtual try-on technology.

- End-to-End Delivery: From concept to deployment, we handle everything: Python backend, AR overlays, and web integration with Flask or Streamlit.

Want an AR try-on app? Contact Us Now!

Your First Step into Building an AR Shopping App

You now know how to build an AR try-on shopping app in Python, from the tech stack to the code walkthrough.

From here, you can grow it into a full augmented reality shopping app for your business or clients.

Start simple, test your ideas, and then scale to full eCommerce integration. The future of shopping is now, so start building your AR try-on app.

FAQs

- Yes, with Python, OpenCV, and Mediapipe, you can create a virtual fitting room app that works as an AR try-on app with real-time clothing overlays.

- The top Python libraries for AR try-on are OpenCV for tracking, Mediapipe for pose detection, and Open3D for handling 3D clothing models.

- OpenCV is powerful, but for a full AR shopping app you’ll also need Mediapipe and, optionally, TensorFlow/PyTorch to make the clothing try-on more realistic.

- Yes, you can build the backend in Python and connect it to WooCommerce through its REST API, turning your project into a real AR try-on app for online shopping.
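For the WooCommerce integration, a Python backend can read products over WooCommerce's REST API (the /wp-json/wc/v3/products endpoint, authenticated with an API consumer key and secret via HTTP Basic Auth over HTTPS). The sketch below only builds the request with the standard library; the store URL and credentials are placeholders, and `build_products_request` is a hypothetical helper.

```python
import base64
import urllib.parse
import urllib.request


def build_products_request(store_url, consumer_key, consumer_secret, per_page=10):
    """Build (but don't send) a GET request for a WooCommerce store's products."""
    query = urllib.parse.urlencode({"per_page": per_page})
    url = f"{store_url.rstrip('/')}/wp-json/wc/v3/products?{query}"
    # HTTP Basic Auth: base64("key:secret"); only safe over HTTPS
    token = base64.b64encode(f"{consumer_key}:{consumer_secret}".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


# Usage (placeholder values; sending requires a real store over HTTPS):
# import json
# req = build_products_request("https://shop.example.com", "ck_xxx", "cs_xxx")
# with urllib.request.urlopen(req) as resp:
#     products = json.load(resp)
```

Each returned product includes image URLs that you could map to the transparent try-on PNGs your app overlays.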